In June 2025, the MIT Media Lab released “Your Brain on ChatGPT,” a preprint study that explored how students’ brains respond when writing with and without AI tools. Participants were split into three groups: ChatGPT users, search engine users, and “brain-only” writers who used no external tools.

Using EEG data, researchers tracked how each group engaged cognitively over four writing sessions. The results were striking. Students using ChatGPT showed lower brain activity, weaker memory recall, and less ownership of their writing. Their essays were well-structured and grammatically polished, but the students themselves learned and retained less.

The study quickly gained attention in the higher education community. While the study is still awaiting peer review, the early findings reflect something we see often in practice: how AI is introduced into learning environments has a direct impact on the depth and quality of learning.

At Risepoint, we guide faculty to teach students to use AI to support rather than replace thinking

We’ve been guiding faculty through this terrain for two years, and we’re not surprised by MIT’s findings. In fact, they reinforce what we already know from decades of learning science: learning requires struggle, reflection, and a sense of agency. When tools like AI short-circuit that process too early, they can interfere with deep thinking. As AI thought leaders Dr. Philippa Hardman, Dr. Ethan Mollick, and others have noted, the goal isn’t to shut down AI, but to teach students to think in partnership with it. When used thoughtfully, AI can be a catalyst for deeper understanding, not a shortcut around it.

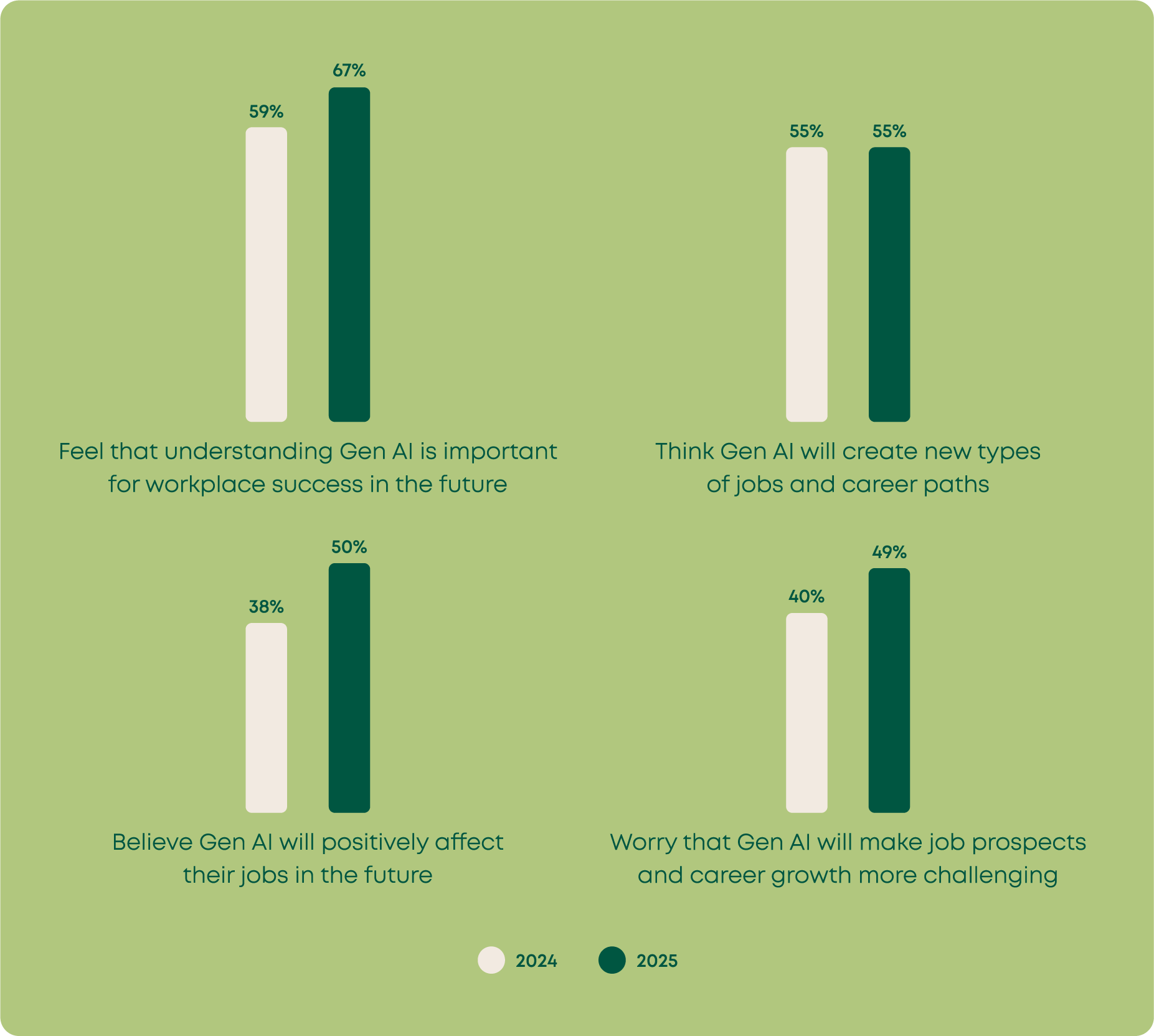

We also know from our Voice of the Online Learner survey that modern learners recognize AI’s growing role in the workplace. They don’t want to be left behind.

That’s why we lean on our Risepoint Design Tenets to help faculty create learning experiences that leverage AI while protecting the integrity of the learning process. Here’s what we recommend.

Five ways to use AI to support learning

1. Delay AI until after the first attempt

Let students brainstorm, outline, or draft on their own first. Once they’ve engaged with the material, AI can be used to refine or critique. This preserves the productive struggle that builds lasting knowledge.

2. Make thinking visible

Require students to submit both their AI interaction and a reflection on how they used it. What did they accept or reject? This cultivates metacognition and ownership, which are key drivers of meaningful learning.

Working with a Risepoint Instructional Designer, one of our partner faculty members designed an assignment for an Advanced Pathophysiology course that blended content mastery with metacognitive reflection. Students were asked to explain how genetic and genomic processes function in the body using course readings and peer-reviewed sources. They were also encouraged to consult AI tools like ChatGPT, Copilot, or Gemini to support their exploration.

The key design move came next. After developing their response, students completed a short reflection prompt that asked them to identify where AI helped, where it fell short, and what reasoning or clinical insight they added on their own.

This approach made students’ thinking visible. It clarified the boundaries between tool-generated content and student-generated insight, reinforced critical thinking, and allowed faculty to assess both the accuracy of the content and the depth of the student’s engagement. Rather than hiding AI use, the assignment treated it as an opportunity for self-awareness and intellectual ownership.

3. Use AI as a collaborator, not an author

Encourage students to treat ChatGPT like a peer, tutor, or devil’s advocate. Prompts like “challenge my argument” or “summarize the counterpoint” foster critical thinking, not shortcutting.

In one Healthcare Leadership course, a Risepoint Instructional Designer helped faculty transform a traditional essay into an AI-powered role-play simulation. Instead of writing a static response, students selected a healthcare role, such as a registered nurse or respiratory therapist, and engaged in a back-and-forth conversation with ChatGPT using structured prompts. They completed four to six exchanges, analyzing how communication patterns shifted based on the AI’s responses.

After the dialogue, students reflected on what caused information to be shared or withheld and connected their insights to core course concepts like psychological safety, leadership influence, and organizational culture. The result was a more interactive, applied, and realistic learning experience that better mirrors the complex interpersonal dynamics of healthcare leadership.

This approach keeps students in control of the cognitive heavy lifting while giving them a safe space to test and explore ideas in conversation with an AI collaborator.

Since MIT’s study, OpenAI has released Study Mode for ChatGPT, essentially a collaborative tutor. Our instructional designers stay current by thoughtfully evaluating innovations like this for their potential to enhance learning while supporting cognitive engagement and sound instructional design.

4. Require source-tracing or rephrasing

If AI-generated ideas appear in assignments, ask students to trace them back to credible sources or rephrase them in their own words. This builds ethical use and reinforces conceptual understanding.

5. Focus on authentic assignments

Design assignments that reflect the types of challenges students will face in the real world. These challenges are often messy, open-ended, and layered. AI can support the process, but the core task should require learners to synthesize, apply, and reflect in ways that are uniquely human. Whether it’s pitching an idea, solving a client case, or writing a policy memo, authentic assessments ensure students are practicing transfer, not just task completion.

In a Computer Information Sciences program, a faculty partner worked with a Risepoint Instructional Designer to build a final project where students created a small software application that connected to a database they had built earlier in the course. Instead of answering quiz questions or completing coding drills, students were asked to simulate a real scenario like building a movie streaming system.

Students used a programming language of their choice to build features for adding, updating, and retrieving data. They also submitted a short video explaining how their app worked, screenshots of it in action, and a written summary that described key choices they made during development. Students were allowed to use AI throughout the project but crucially had to demonstrate deep knowledge and ownership of the final product in the same way they would have to in the workplace.

This kind of assignment helps students show more than just whether their code runs. It gives them the chance to explain their thinking, apply what they’ve learned, and build skills they can use in the workplace. It also gives faculty a clearer view of how well students understand their own work.
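For readers curious what the core of such a project might look like, here is a minimal sketch of the add, update, and retrieve features described above. It assumes Python and an in-memory SQLite database purely for illustration; students chose their own language, and the table, column, and function names below are hypothetical rather than taken from the course.

```python
import sqlite3


def create_schema(conn):
    # Hypothetical table for a small movie streaming catalog.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS movies ("
        "id INTEGER PRIMARY KEY, title TEXT NOT NULL, year INTEGER)"
    )


def add_movie(conn, title, year):
    # Insert a movie and return the id the database assigned to it.
    cur = conn.execute(
        "INSERT INTO movies (title, year) VALUES (?, ?)", (title, year)
    )
    conn.commit()
    return cur.lastrowid


def update_year(conn, movie_id, year):
    # Change a movie's release year; True only if exactly one row changed.
    cur = conn.execute(
        "UPDATE movies SET year = ? WHERE id = ?", (year, movie_id)
    )
    conn.commit()
    return cur.rowcount == 1


def get_movie(conn, movie_id):
    # Return (id, title, year) for the movie, or None if it does not exist.
    return conn.execute(
        "SELECT id, title, year FROM movies WHERE id = ?", (movie_id,)
    ).fetchone()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    movie_id = add_movie(conn, "Example Film", 1999)
    update_year(conn, movie_id, 2001)
    print(get_movie(conn, movie_id))
```

The code itself is short, which is the point: the learning shows up in the video walkthrough and written summary, where students explain choices such as why queries use placeholders instead of string concatenation.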

Designing with AI is a strategic choice

The MIT study highlights a simple truth. Students learn best when they are cognitively engaged, emotionally connected, and given the right tools at the right time. AI can support that, but only when it’s integrated with purpose and structure. To support this engagement, we recommend that campuses have a strong AI policy and ideally a campus-provided or vetted AI tool for students.

At Risepoint, we don’t ask whether students should use AI. We ask how to design learning experiences that help them use it wisely. With the right scaffolding, students can gain the benefits of AI support without sacrificing ownership, memory, or critical thinking.

That’s not just thoughtful course design. That’s the future of learning, and we’re building it with our partners every day.

Meet the author

Tina Peters

As Senior Director of Instructional Design at Risepoint, Tina leads the vision and strategy for Risepoint’s learner experience. She and her team collaborate with faculty and academic leaders to design courses that support student success and career readiness. Her current focus includes integrating AI tools into course development to boost student engagement and real-world application.

Tina earned a B.S. in Psychology from the University of Illinois Urbana-Champaign and an M.A. in Learning Sciences from Northwestern University. With more than 25 years of experience in online higher education and the training industry, she has expertise in instructional design, user experience, product innovation, and AI. She currently leads Risepoint’s Instructional Design and Quality Review teams.